Web scraping is a powerful tool for data collection and analysis, allowing users to gather large amounts of information automatically. However, it can easily get a scraper blocked or banned, either because a single IP address is generating heavy traffic or because the scraping violates a site's terms of service. This is where proxies come into play: they address IP-based blocking, although they do not change what a site's terms of service permit.

Advantages of Using Proxies

Increased Anonymity

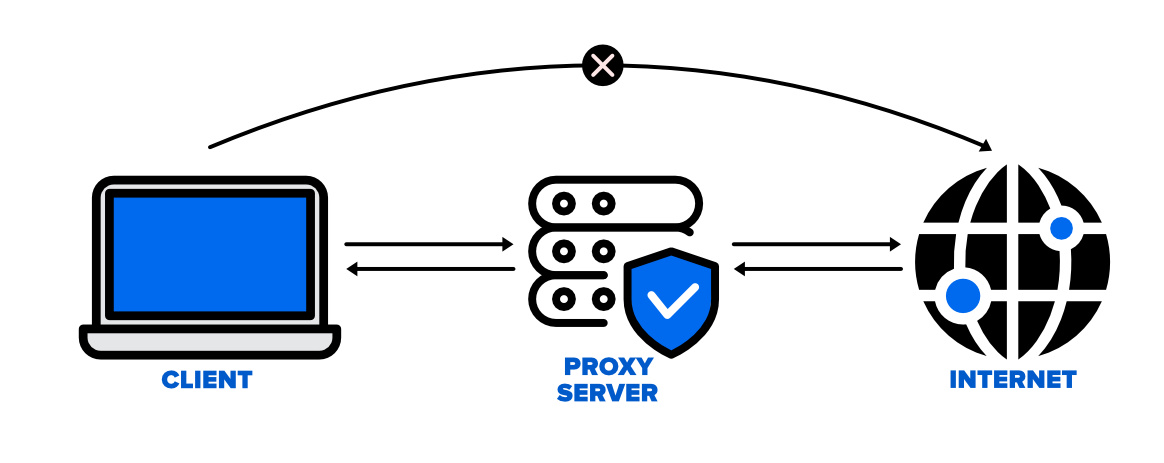

Routing scraping traffic through a proxy adds a layer of anonymity. The target site sees the proxy's IP address instead of your real one, which reduces the risk of detection and blocking. This lets you collect data more efficiently without exposing your own IP address or approximate location.
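As a minimal sketch, here is how a Python script might route its requests through a single proxy using the standard library's `urllib.request.ProxyHandler`. The helper name `build_proxied_opener` and the proxy address are hypothetical; the address comes from a documentation-only IP range, and a real provider may also require credentials, which this sketch does not handle.

```python
import urllib.request

def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests via proxy_url."""
    # ProxyHandler maps each URL scheme to the proxy that should carry it.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)

# Hypothetical proxy address; replace it with one from your provider.
opener = build_proxied_opener("http://203.0.113.10:8080")
# opener.open("https://example.com/") would now go through the proxy,
# so the target site sees the proxy's IP address instead of yours.
```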

Enhanced Security

Proxies act as an intermediary between your server and the websites you scrape, so target sites never connect to your machine directly. Some proxies also support encrypted connections between your client and the proxy, but protecting the contents of your requests end to end still depends on using HTTPS with the target site.
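One simple safeguard, sketched below under the assumption that you scrape through an ordinary forward proxy, is to refuse plain-HTTP targets. With such a proxy, `https://` requests are normally tunnelled with the HTTP `CONNECT` method, so the proxy relays encrypted bytes rather than readable requests. The helper name `require_https` is hypothetical.

```python
from urllib.parse import urlsplit

def require_https(url: str) -> str:
    """Reject plain-HTTP targets so request contents stay encrypted end to end."""
    # An https:// URL is tunnelled through a forward proxy, which then cannot
    # read it (unless you trust a certificate the proxy presents);
    # an http:// URL is visible to the proxy and anyone on the path.
    if urlsplit(url).scheme.lower() != "https":
        raise ValueError(f"refusing non-HTTPS URL: {url}")
    return url
```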

Improved Efficiency and Speed

Some proxies can cache responses, temporarily storing copies of web pages. When the same page is requested repeatedly, the proxy can serve the cached copy instead of fetching it from the source again, which cuts loading times and speeds up scraping. Caching only helps for pages that do not change between requests, and a forward proxy cannot cache HTTPS traffic that it merely tunnels.
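To illustrate the caching idea, here is a small client-side cache with a time-to-live (TTL). It is not how any particular proxy implements caching; the class name `TTLPageCache`, the `ttl` parameter, and the injectable `clock` are assumptions made for this example.

```python
import time
from typing import Callable, Dict, Tuple

class TTLPageCache:
    """Keep fetched pages for `ttl` seconds and reuse them instead of refetching."""

    def __init__(self, fetch: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch    # function that actually downloads a URL
        self._ttl = ttl        # how long a cached copy counts as fresh
        self._clock = clock    # injectable so the cache can be tested
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, url: str) -> str:
        now = self._clock()
        hit = self._store.get(url)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]          # fresh copy: no request to the origin
        body = self._fetch(url)    # missing or stale: fetch and remember it
        self._store[url] = (now, body)
        return body
```

A repeated `get` for the same URL within the TTL returns the stored page without calling `fetch` again; once the TTL has passed, the page is downloaded afresh.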

Overcoming Geo-restrictions

Some websites display different content depending on the visitor's region, or are only accessible from certain countries. Proxies with exit IP addresses in multiple countries let you request pages as a visitor from those regions, bypassing these geo-restrictions and giving access to a wider range of data.
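A sketch of region-aware proxy selection, assuming a hypothetical pool keyed by ISO 3166-1 country code. The addresses are documentation-range placeholders. In practice the country attached to each IP comes from your provider, and public geolocation databases do not always agree with it.

```python
import random
from typing import Dict, List, Optional

# Hypothetical pool; the addresses are placeholders, not real proxies.
PROXY_POOL: Dict[str, List[str]] = {
    "US": ["http://203.0.113.10:8080", "http://203.0.113.11:8080"],
    "DE": ["http://198.51.100.20:8080"],
    "JP": ["http://192.0.2.30:8080"],
}

def proxy_for_country(country: str, rng: Optional[random.Random] = None) -> str:
    """Pick a proxy whose exit IP is (reportedly) located in `country`."""
    candidates = PROXY_POOL.get(country.upper())
    if not candidates:
        raise LookupError(f"no proxy available for {country!r}")
    # Choose randomly among same-country proxies to spread the load.
    return (rng or random).choice(candidates)
```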

Load Balancing

By distributing requests across several proxies, you avoid concentrating traffic on any single IP address, which reduces the risk of being identified and banned by target websites. This approach also improves the overall efficiency and reliability of your scraping operation, since a single slow or failing proxy no longer holds up every request.
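Round-robin assignment is one simple way to spread requests across a pool. The sketch below, built around a hypothetical `assign_round_robin` helper, pairs each URL with the next proxy in turn; real rotation schemes often also drop failing proxies or add delays between requests, which this does not do.

```python
import itertools
from typing import Iterable, List, Tuple

def assign_round_robin(urls: Iterable[str], proxies: List[str]) -> List[Tuple[str, str]]:
    """Pair each URL with the next proxy in turn so load is spread evenly."""
    if not proxies:
        raise ValueError("need at least one proxy")
    rotation = itertools.cycle(proxies)  # p0, p1, ..., p0, p1, ... forever
    return [(url, next(rotation)) for url in urls]
```

With five URLs and two proxies `A` and `B`, the assignment alternates A, B, A, B, A, so no proxy handles more than one request beyond any other.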

Cost-Effective Scaling

Proxies make it cost-effective to scale data scraping operations. Instead of investing in more hardware or sophisticated software, you can spread a growing volume of requests across more proxies, expanding your data collection efforts without a large increase in cost.

Key Metrics to Consider

When selecting a proxy for scraping, consider the following metrics to ensure optimal performance and cost-efficiency:

- Speed: Look for proxies with low latency and high throughput to minimize data collection times.

- Reliability: Choose proxies with high uptime and consistent performance.

- Cost: Evaluate the pricing models of different proxy providers to find one that fits your budget. Remember, the cheapest option isn't always the best in terms of quality and reliability.

- Geographical Coverage: Ensure the proxy provider offers IP addresses in the regions you intend to scrape data from.
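Speed and reliability are also easy to measure yourself. The sketch below assumes you have already sent test requests through each proxy and recorded whether each succeeded and how long it took; the `summarize` and `rank` helpers and the ranking rule (success rate first, then median latency) are illustrative assumptions. Cost and geographical coverage still need to be weighed separately.

```python
import statistics
from typing import Dict, List, Tuple

# Each probe is (succeeded, latency_seconds); latency of failed probes is ignored.
Probe = Tuple[bool, float]

def summarize(probes: List[Probe]) -> Dict[str, float]:
    """Success rate (reliability) and median latency of successful probes (speed)."""
    if not probes:
        raise ValueError("no probes recorded")
    ok_latencies = [lat for ok, lat in probes if ok]
    return {
        "success_rate": len(ok_latencies) / len(probes),
        "median_latency": statistics.median(ok_latencies) if ok_latencies else float("inf"),
    }

def rank(results: Dict[str, List[Probe]]) -> List[str]:
    """Order proxies by success rate (higher first), then median latency (lower first)."""
    summaries = {name: summarize(p) for name, p in results.items()}
    return sorted(summaries, key=lambda n: (-summaries[n]["success_rate"],
                                            summaries[n]["median_latency"]))
```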

By incorporating proxies into your web scraping strategy, you can enhance data collection efforts, improve efficiency, and significantly reduce the risk of being blocked. For a reliable proxy solution, consider using proxy scraper, which offers a range of features designed to optimize your scraping operations.